How to process gigantic files in Golang — Fast and Memory-Efficient

A practical guide with code for handling millions of lines using Go’s concurrency and object pooling.

Why is Golang a good choice for huge files?

- Efficient memory usage: Go lets you read files incrementally (for example with bufio.Scanner or any io.Reader), so you never have to load the whole file into RAM.
- Concurrency support: Goroutines and channels let you process parts of the file in parallel, speeding up tasks like filtering, transformation, or computation.
- Strong standard library: The os, io, bufio, and encoding packages (such as encoding/csv) provide the building blocks for efficient file processing.
- Static typing and performance: Go compiles to native code, which typically runs faster than interpreted scripting languages, and the compiler catches type errors before the program runs.

Sample project

As developers, we often need to process very large files without consuming too much memory or CPU, because fewer resources mean lower costs 🤑. In this post, I’ll show you how to handle huge files efficiently in Go, line by line, using concurrency and memory reuse.

Project Structure

```text
├── dummy_100000000_rows.csv
├── generate.sh
├── main.go
├── model.go
└── processor.go
```

Model

model.go defines the reusable data structures (Payload and Chunk) and uses sync.Pool to recycle them instead of allocating new ones for every line, which reduces allocations and garbage-collection work when processing large amounts of data.

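```go
package main

import "sync"

// Payload holds a copy of one input line.
type Payload struct {
	Data []byte
}

// Chunk groups several payloads so they travel through the channel as one unit.
type Chunk struct {
	payloads []*Payload
}

// payloadPool recycles Payload objects (and their Data buffers) across lines.
var payloadPool = sync.Pool{
	New: func() interface{} {
		return &Payload{}
	},
}

// chunkPool recycles Chunk objects (and their payloads slices) across chunks.
var chunkPool = sync.Pool{
	New: func() interface{} {
		return &Chunk{
			payloads: make([]*Payload, 0),
		}
	},
}

// getPayload returns a pooled Payload, or a new one if the pool is empty.
func getPayload() *Payload {
	return payloadPool.Get().(*Payload)
}

// releasePayload truncates the buffer (keeping its capacity for reuse)
// and returns the Payload to the pool.
func releasePayload(payload *Payload) {
	payload.Data = payload.Data[:0]
	payloadPool.Put(payload)
}

// getChunk returns a pooled Chunk, or a new one if the pool is empty.
func getChunk() *Chunk {
	return chunkPool.Get().(*Chunk)
}

// releaseChunk empties the chunk (keeping the slice's capacity for reuse)
// and returns it to the pool.
func releaseChunk(chunk *Chunk) {
	chunk.payloads = chunk.payloads[:0]
	chunkPool.Put(chunk)
}
```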
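Since Go 1.18, the type assertions in getPayload and getChunk can be hidden behind a small generic wrapper. The sketch below is an alternative design, not the project's code: TypedPool and NewTypedPool are hypothetical names, and Payload simply mirrors the struct above. Keep in mind that sync.Pool does not guarantee that an object you Put will come back from a later Get (the runtime may drop pooled objects during garbage collection), so a pool reduces allocations rather than eliminating them.

```go
package main

import (
	"fmt"
	"sync"
)

// TypedPool is a hypothetical generic wrapper around sync.Pool. It removes the
// interface{} type assertions and always resets an object before pooling it.
type TypedPool[T any] struct {
	pool  sync.Pool
	reset func(*T)
}

// NewTypedPool creates a pool that allocates a zero-valued *T when it is empty.
func NewTypedPool[T any](reset func(*T)) *TypedPool[T] {
	return &TypedPool[T]{
		pool:  sync.Pool{New: func() any { return new(T) }},
		reset: reset,
	}
}

// Get returns a pooled object, or a freshly allocated one if none is available.
func (p *TypedPool[T]) Get() *T {
	return p.pool.Get().(*T)
}

// Put resets x and hands it back to the pool. Callers should not use x afterwards.
func (p *TypedPool[T]) Put(x *T) {
	p.reset(x)
	p.pool.Put(x)
}

// Payload mirrors the struct in model.go.
type Payload struct {
	Data []byte
}

func main() {
	payloads := NewTypedPool(func(p *Payload) { p.Data = p.Data[:0] })

	p := payloads.Get()
	p.Data = append(p.Data, "1,Alice"...)
	fmt.Println(string(p.Data))

	// Truncating keeps the slice's capacity, so a later Get may reuse the buffer.
	payloads.Put(p)
}
```

Putting the reset inside Put means no call site can forget it, which is the main benefit over separate release helpers.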

Processor

processor.go reads the file with a bufio.Scanner, groups lines into chunks of chunkSize lines, and distributes the chunks to a pool of numWorkers goroutines through a buffered channel. Because lines are copied into pooled Payload and Chunk objects, allocations stay low, and memory use is bounded by the number of chunks in flight rather than by the size of the file.

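```go
package main

import (
	"bufio"
	"io"
	"log"
	"sync"
)

// processFile reads the input line by line, groups lines into chunks of up to
// chunkSize lines, and fans the chunks out to numWorkers goroutines.
func processFile(file io.Reader, numWorkers int, chunkSize int) {
	scanner := bufio.NewScanner(file)
	// Buffered channel: the reader can get up to numWorkers chunks ahead of the workers.
	payloadChan := make(chan *Chunk, numWorkers)

	var wg sync.WaitGroup

	// Start the worker pool.
	for i := 0; i < numWorkers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for chunk := range payloadChan {
				for _, payload := range chunk.payloads {
					// Actual processing logic goes here, e.g.:
					// fmt.Println(string(payload.Data))

					// Return the payload to the pool once it is no longer needed.
					releasePayload(payload)
				}
				releaseChunk(chunk)
			}
		}()
	}

	// Producer: scan the input and send filled chunks to the workers.
	go func() {
		// Closing the channel ends the workers' range loops.
		defer close(payloadChan)
		for {
			chunk := getChunk()
			for len(chunk.payloads) < chunkSize && scanner.Scan() {
				payload := getPayload()
				// scanner.Bytes() is overwritten by the next Scan, so copy it
				// into the pooled payload's buffer.
				payload.Data = append(payload.Data[:0], scanner.Bytes()...)
				chunk.payloads = append(chunk.payloads, payload)
			}
			if len(chunk.payloads) > 0 {
				payloadChan <- chunk
			} else {
				// No more lines: return the empty chunk and stop.
				releaseChunk(chunk)
				break
			}
		}
		if err := scanner.Err(); err != nil {
			log.Printf("Scanner error: %v", err)
		}
	}()

	wg.Wait()
}
```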

Main

main.go is a small command-line interface: it parses the flags, opens the specified file, and runs processFile with those settings. When processing finishes, it logs the execution time and memory statistics so you can monitor performance while handling large files.

```go
package main

import (
	"flag"
	"log"
	"os"
	"runtime"
	"time"
)

func main() {
	// The flag package already appends "(default 1000)" etc. to the help text.
	filePath := flag.String("file", "", "Path to CSV file to process (required)")
	chunkSize := flag.Int("chunk", 1000, "Number of lines per chunk")
	workers := flag.Int("workers", runtime.NumCPU(), "Number of concurrent workers")
	flag.Parse()

	if *filePath == "" {
		log.Fatal("Missing required --file parameter")
	}
	// With zero workers or a non-positive chunk size, processFile would process nothing.
	if *chunkSize <= 0 || *workers <= 0 {
		log.Fatal("--chunk and --workers must be greater than zero")
	}

	startTime := time.Now()

	file, err := os.Open(*filePath)
	if err != nil {
		log.Fatalf("Failed to open file: %v", err)
	}
	defer file.Close()

	stat, err := file.Stat()
	if err != nil {
		log.Fatalf("Failed to get file stats: %v", err)
	}
	fileSize := stat.Size()

	log.Printf("Processing file: %s (%.2f MB)", *filePath, float64(fileSize)/1024/1024)
	log.Printf("Number of workers: %d", *workers)
	log.Printf("Chunk size: %d", *chunkSize)

	processFile(file, *workers, *chunkSize)

	printMemStats()
	log.Printf("Processing completed in %v", time.Since(startTime))
}

// printMemStats logs heap and GC statistics collected by the Go runtime.
func printMemStats() {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	log.Printf("Memory stats: Alloc=%.2fMB, TotalAlloc=%.2fMB, Sys=%.2fMB, NumGC=%d",
		float64(m.Alloc)/1024/1024,
		float64(m.TotalAlloc)/1024/1024,
		float64(m.Sys)/1024/1024,
		m.NumGC,
	)
}
```
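When reading those numbers, it helps to know what each runtime.MemStats field means: Alloc is the bytes of heap objects currently allocated, TotalAlloc is the cumulative bytes allocated over the program's lifetime (it never decreases), Sys is the total memory obtained from the operating system, and NumGC counts completed GC cycles. Alloc at the end of a run therefore shows what is still live, not the peak. To compare settings (for example, with and without pooling), a before/after snapshot around a call can be more informative. allocDelta below is a hypothetical helper, sketched as one way to do that.

```go
package main

import (
	"fmt"
	"runtime"
)

// sink keeps allocations reachable so the compiler cannot optimize them away.
var sink []byte

// allocDelta is a hypothetical helper that runs f and reports the bytes it
// allocated on the heap (TotalAlloc difference) and the number of GC cycles
// that completed meanwhile. ReadMemStats briefly stops the world, so wrap only
// coarse-grained work such as a whole processFile call.
func allocDelta(f func()) (allocated uint64, gcCycles uint32) {
	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)
	f()
	runtime.ReadMemStats(&after)
	return after.TotalAlloc - before.TotalAlloc, after.NumGC - before.NumGC
}

func main() {
	allocated, gcCycles := allocDelta(func() {
		for i := 0; i < 100; i++ {
			sink = make([]byte, 1<<20) // 1 MiB per iteration, 100 MiB in total
		}
	})
	fmt.Printf("allocated %.2f MB, %d GC cycles\n", float64(allocated)/1024/1024, gcCycles)
}
```

In main.go, wrapping the processFile call this way would report how much the run allocated, independently of memory allocated earlier by flag parsing or logging.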

Supported CLI parameters:

| Flag | Description | Type | Default value | Required |
|------|-------------|------|---------------|----------|
| `--file` | Path to the CSV file to process | string | None | Yes |
| `--chunk` | Number of lines per processing chunk | int | 1000 | No |
| `--workers` | Number of concurrent worker goroutines to use | int | Number of logical CPUs (`runtime.NumCPU()`) | No |

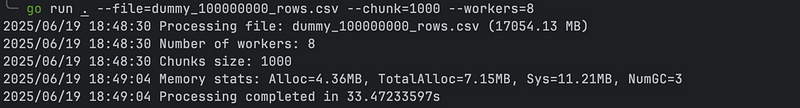

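Example usage (run it from the project directory so that main.go, model.go, and processor.go are all compiled; `go run main.go` alone would fail with undefined processFile):

```shell
go run . --file=dummy_10000000_rows.csv --chunk=1000 --workers=8
```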

The output will look similar to this, depending on your file and hardware:

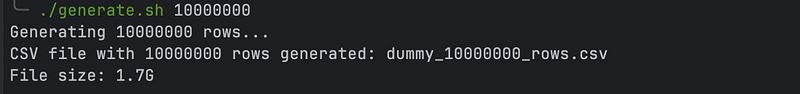

Bonus: Generate sample data

The project includes a simple Bash script (generate.sh) that quickly creates realistic sample data in CSV format, perfect for testing and demonstrations.

```bash
#!/bin/bash

DEFAULT_ROWS=1000000
NUM_ROWS=${1:-$DEFAULT_ROWS}
FILENAME="dummy_${NUM_ROWS}_rows.csv"

echo "Generating $NUM_ROWS rows..."

# Write the CSV header (overwrites any existing file)
echo "ID,Name,Surname,Gender,Age,City,Score,Code,Status,Date,Email,Country,Verified,Phone,IP,Browser,OS,Device,Source,Plan,Referral" > "$FILENAME"

# Generate all rows in a single awk process and append them to the file
awk -v rows="$NUM_ROWS" '
BEGIN {
    srand();

    # Value pools for the random fields
    split("Alice,Bob,Charlie,David,Eve,Frank,Grace,Hannah,Ian,Julia,Sam,Manuel,Francisco,Fred,Carlos", names, ",");
    split("Smith,Johnson,Williams,Brown,Jones,Garcia,Miller,Davis,Martinez,Lopez,Marshall,Andres", surnames, ",");
    split("Male,Female,Other", genders, ",");
    split("New York,London,Berlin,Barcelona,Tokyo,Sydney,Paris,Dubai,Rome,Madrid,Beijing", cities, ",");
    split("USA,UK,Germany,Japan,Australia,France,UAE,Italy,Spain,China", countries, ",");
    split("example.com,test.org,demo.net,sample.io", domains, ",");
    split("Chrome,Firefox,Safari,Edge,Opera", browsers, ",");
    split("Windows,macOS,Linux,Android,iOS", oss, ",");
    split("Desktop,Laptop,Tablet,Mobile", devices, ",");
    split("Google,Facebook,Twitter,LinkedIn,Direct,Email", sources, ",");
    split("Free,Basic,Pro,Enterprise", plans, ",");

    for (i = 1; i <= rows; i++) {
        name = names[int(rand()*length(names))+1];
        surname = surnames[int(rand()*length(surnames))+1];
        gender = genders[int(rand()*length(genders))+1];
        age = 18 + int(rand()*63);
        city = cities[int(rand()*length(cities))+1];
        score = sprintf("%.2f", rand()*100);
        code = int(rand()*99999) + 10000;
        status = (rand() > 0.7) ? "Suspended" : ((rand() > 0.5) ? "Active" : "Inactive");
        day = sprintf("%02d", 1 + int(rand()*28));
        date = "2025-" sprintf("%02d", 1 + int(rand()*12)) "-" day;
        email = tolower(name) i "@" domains[int(rand()*length(domains))+1];
        country = countries[int(rand()*length(countries))+1];
        verified = (rand() > 0.5) ? "true" : "false";
        phone = sprintf("+1-%03d-%03d-%04d", int(rand()*900+100), int(rand()*900+100), int(rand()*10000));
        ip = int(rand()*255) "." int(rand()*255) "." int(rand()*255) "." int(rand()*255);
        browser = browsers[int(rand()*length(browsers))+1];
        os = oss[int(rand()*length(oss))+1];
        device = devices[int(rand()*length(devices))+1];
        source = sources[int(rand()*length(sources))+1];
        plan = plans[int(rand()*length(plans))+1];
        referral = sprintf("REF_%04d", int(rand()*10000));

        printf "%d,%s,%s,%s,%d,%s,%s,%d,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s\n",
            i, name, surname, gender, age, city, score, code, status, date, email, country, verified,
            phone, ip, browser, os, device, source, plan, referral;
    }
}' >> "$FILENAME"

echo "CSV file with $NUM_ROWS rows generated: $FILENAME"

FILE_SIZE=$(du -h "$FILENAME" | cut -f1)
echo "File size: $FILE_SIZE"
```

```shell
# Assign execution permissions
chmod +x generate.sh

# Example: run with the number of rows to generate (default: 1000000)
./generate.sh 10000000
```

When you run it, the script prints the number of rows it is generating, the name of the generated file, and its size.

NOTE: Depending on the number of rows and the speed of your computer, this script can take a long time.

Conclusion

Processing huge files in Go doesn’t have to be hard. By combining Go’s concurrency with memory reuse, you can handle big CSV or text files efficiently while keeping memory usage low. This makes your programs faster and more reliable, especially when dealing with large datasets.

Repository

The code for this tutorial is available in the public GitHub repository albertcolom/go-file-processor.

Originally published at albertcolom.com.
