About Author

Hi, I'm Sharon, a product manager at Chaitin Tech. We build SafeLine, an open-source Web Application Firewall built for real-world threats. While SafeLine focuses on HTTP-layer protection, our emergency response center monitors and responds to RCE and authentication vulnerabilities across the stack to help developers stay safe.

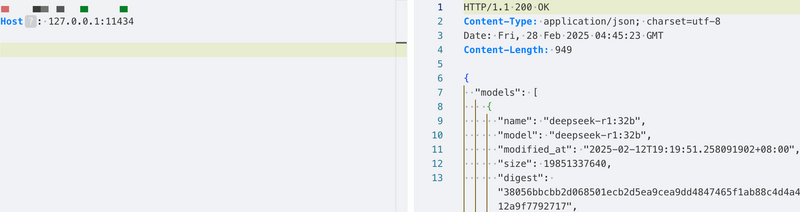

Ollama is an open-source runtime and toolkit for deploying large language models (LLMs) like DeepSeek. It's meant to make it easy for developers to run and manage LLMs locally or in production environments.

However, a serious security risk has been identified: if Ollama's default port (11434) is exposed to the internet without any authentication mechanism, attackers can access sensitive API endpoints without permission. This could lead to data theft, resource abuse, or even full server compromise.

Vulnerability Overview

Root Cause

By default, Ollama listens only on 127.0.0.1, so the API is reachable only from localhost. Many users change the bind address to 0.0.0.0 to enable remote access, and because the API has no authentication, this exposes every endpoint to anyone who can reach the port, including the public internet.
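As a rough illustration of the distinction above, the Python sketch below checks whether a bind address is loopback-only. It assumes the address is configured through the OLLAMA_HOST environment variable and arrives in forms such as 127.0.0.1, 0.0.0.0:11434, or http://0.0.0.0:11434; the function name is hypothetical, and anything it cannot parse is treated as not loopback-only.

```python
import ipaddress
import os


def bind_is_loopback_only(ollama_host: str) -> bool:
    """Return True if an OLLAMA_HOST-style value binds to loopback only.

    Hypothetical helper: unparseable values are reported as NOT
    loopback-only, so they get a manual look.
    """
    value = ollama_host.strip()
    # Drop an optional scheme such as "http://".
    if "://" in value:
        value = value.split("://", 1)[1]
    # Drop any trailing path.
    value = value.split("/", 1)[0]
    if value.startswith("["):
        # Bracketed IPv6 form, e.g. "[::1]:11434".
        value = value[1:].split("]", 1)[0]
    elif value.count(":") == 1:
        # "host:port" form for IPv4 addresses and hostnames.
        value = value.rsplit(":", 1)[0]
    if value == "localhost":
        return True
    try:
        return ipaddress.ip_address(value).is_loopback
    except ValueError:
        return False


if __name__ == "__main__":
    # Assumption: an unset variable means the default of 127.0.0.1.
    configured = os.environ.get("OLLAMA_HOST", "127.0.0.1")
    if bind_is_loopback_only(configured):
        print(f"{configured}: loopback only")
    else:
        print(f"{configured}: reachable from other hosts, add access controls")
```

A loopback-only result does not cover a reverse proxy, port forward, or container port mapping that republishes the port, so those need a separate check.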

Some older versions (e.g. prior to v0.1.34) also include additional security flaws, such as a path traversal issue in the /api/pull endpoint (CVE-2024-37032), which can allow arbitrary file overwrite and remote code execution.

Real-World Risks

- Data Leakage: Attackers can pull private models and internal data using unauthenticated API calls.

- Resource Abuse / DoS: APIs like /api/generate and /api/pull can be spammed, consuming CPU, bandwidth, or disk space.

- Configuration Hijack: Exposed endpoints can be used to alter server state or settings.

- Chained Exploits: Attackers may combine this issue with other vulnerabilities to take full control of the host.

This issue has already been exploited in the wild.
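To check whether one of your own deployments is affected, a minimal Python sketch like the one below can help. It requests /api/tags (the Ollama endpoint that lists local models) without credentials and classifies the result. The function names and result labels are hypothetical, and the classification is a heuristic, not a full scanner. Only probe hosts you are authorized to test.

```python
import json
import urllib.error
import urllib.request


def classify_response(status: int, body: bytes) -> str:
    """Classify an unauthenticated response from /api/tags."""
    if status in (401, 403):
        # Something in front of Ollama is asking for credentials.
        return "protected"
    if status == 200:
        try:
            data = json.loads(body)
        except ValueError:
            return "unknown"
        # An open Ollama API answers with a JSON object holding "models".
        if isinstance(data, dict) and "models" in data:
            return "exposed"
    return "unknown"


def probe(base_url: str, timeout: float = 5.0) -> str:
    """Send one unauthenticated GET to <base_url>/api/tags."""
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify_response(resp.status, resp.read())
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError by urllib.
        return classify_response(err.code, b"")
    except (urllib.error.URLError, OSError):
        return "unreachable"


if __name__ == "__main__":
    # Placeholder target: replace with a host you own.
    print(probe("http://127.0.0.1:11434"))
```

Run the probe from outside your network as well as inside it. A result of "exposed" from an external vantage point means the model list, and the rest of the API, is open to anyone.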

Affected Versions

All Ollama versions are affected if the service is bound to 0.0.0.0 (or any other externally reachable address) and lacks authentication.

Some related vulnerabilities (e.g. CVE-2024-37032) were fixed in v0.1.34. Users running older versions should update immediately.
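A version string can be compared against the fixed release with a few lines of Python. This is a sketch under stated assumptions: the version is a plain MAJOR.MINOR.PATCH string with an optional leading "v" (for example, as reported by your installation), pre-release suffixes are ignored, and the helper names are hypothetical.

```python
import re

# First release that contains the fix for CVE-2024-37032.
FIXED_IN = (0, 1, 34)


def parse_version(text: str) -> tuple:
    """Parse 'v0.1.33' or '0.1.33' into (0, 1, 33)."""
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def predates_fix(version: str) -> bool:
    """Return True if the version is older than the fixed release."""
    return parse_version(version) < FIXED_IN


if __name__ == "__main__":
    for candidate in ("0.1.33", "v0.1.34", "0.5.7"):
        state = "update required" if predates_fix(candidate) else "has the fix"
        print(f"{candidate}: {state}")
```

Passing this check only rules out the patched CVE. An up-to-date instance that is exposed without authentication is still affected by the main issue described here.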

Mitigation Guide

1. Restrict Public Access

Avoid exposing Ollama to the internet unless absolutely necessary. Keep it on 127.0.0.1 or restrict access via a VPN or internal network.

2. Use Access Control

Set up firewall rules, cloud security groups, or network ACLs to restrict port 11434 to trusted IPs only.
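The firewall or security group does the actual enforcement, but it can help to sanity-check a planned allowlist before applying it. The Python sketch below models the "trusted IPs only" decision with the standard ipaddress module. The network ranges and the function name are illustrative assumptions, not recommended values.

```python
import ipaddress

# Hypothetical allowlist: replace with the ranges you actually trust.
TRUSTED_NETWORKS = ["10.0.0.0/8", "192.168.1.0/24"]


def is_trusted(client_ip: str, trusted=TRUSTED_NETWORKS) -> bool:
    """Return True if client_ip falls inside any trusted CIDR range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in trusted)


if __name__ == "__main__":
    for ip in ("192.168.1.20", "10.4.5.6", "203.0.113.7"):
        verdict = "allow" if is_trusted(ip) else "deny"
        print(f"{ip} -> port 11434: {verdict}")
```

Everything outside the listed ranges should be denied by default, which is the same posture the firewall rule for port 11434 should take.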

3. Enable Authentication (via Reverse Proxy)

Since Ollama doesn’t yet support built-in auth, use a reverse proxy like NGINX with basic auth or OAuth.

Example NGINX Config

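    location / {
        # Forward requests to the local Ollama API.
        proxy_pass http://localhost:11434;

        # Require HTTP basic auth; credentials are read from the htpasswd file.
        auth_basic "Ollama Admin";
        auth_basic_user_file /etc/nginx/conf.d/ollama.htpasswd;
    }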

Other tools like Caddy, Apache, or API gateways can also be used.
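Once basic auth is in place, clients have to send credentials with every request. The Python sketch below shows one way a client might build such a request against /api/tags. The hostname, username, and password are placeholders, and the helper names are hypothetical.

```python
import base64
import urllib.request


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of an HTTP Basic 'Authorization' header."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_request(base_url: str, username: str, password: str) -> urllib.request.Request:
    """Prepare an authenticated GET for the proxied /api/tags endpoint."""
    request = urllib.request.Request(base_url.rstrip("/") + "/api/tags")
    request.add_header("Authorization", basic_auth_header(username, password))
    return request


if __name__ == "__main__":
    # Placeholder values: substitute your proxy URL and credentials.
    req = build_request("https://ollama.example.com", "admin", "change-me")
    print(req.full_url)
    print(req.get_header("Authorization"))
    # To send it: urllib.request.urlopen(req, timeout=5)
```

Basic auth only base64-encodes the credentials, so the proxy should terminate TLS and clients should connect over https.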

Reproduction

Timeline

- Feb 27, 2025 – Vulnerability reproduced by Chaitin Security Lab

- Feb 28, 2025 – Public advisory released

Final Thoughts

If you’re running Ollama and have made it internet-facing, act now. Either take it offline or secure it with proper network controls and authentication. Don’t let your private models or compute resources be hijacked by attackers.
