🧠 The AI Boom and the Surge in Web Crawlers

Since AI tools like ChatGPT arrived, web crawling has increased noticeably. These models often draw their information from technical forums and websites, so valuable content is being scraped more heavily. Web administrators are increasingly concerned about unauthorized data extraction and bandwidth consumption.

🛡️ Why Traditional Anti-Bot Tactics Fall Short

Most websites rely on basic defenses:

- robots.txt to politely ask bots to back off (they don’t)

- User-Agent filtering

- Referer checks

- Rate limiting by IP

- Cookie-based access

- JavaScript-based obfuscation

Unfortunately, modern scrapers walk right through these. Here's how:

| Technique | How Bots Bypass It |
|---|---|
| User-Agent filtering | Fake headers |
| Referer checks | Fake headers |
| Rate limiting | Rotate proxies/IPs |
| Cookie checks | Steal/clone cookies |
| JS obfuscation | Use headless browsers |

It's a game of cat and mouse, and the bots are getting better.
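To make the rate-limiting row concrete, here is a minimal sketch of a per-IP sliding-window limiter and of how proxy rotation sidesteps it. The class name `PerIpRateLimiter`, the helper `count_allowed`, the limit of 5 requests per 60 seconds, and the documentation-range IP addresses are all assumptions chosen for illustration, not taken from any particular product.

```python
from collections import defaultdict, deque


class PerIpRateLimiter:
    """Sliding-window limiter: at most `limit` requests per `window` seconds per IP."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # ip -> timestamps of recent allowed requests

    def allow(self, ip: str, now: float) -> bool:
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True


def count_allowed(limiter: PerIpRateLimiter, ips: list, requests: int) -> int:
    """Send `requests` back-to-back requests, cycling through `ips`."""
    return sum(limiter.allow(ips[i % len(ips)], now=0.0) for i in range(requests))


# One scraper on one IP: only the first 5 of 100 requests get through.
single_ip = count_allowed(PerIpRateLimiter(5, 60.0), ["203.0.113.7"], 100)

# The same scraper behind 50 rotating proxies: each IP stays under the limit.
proxies = [f"198.51.100.{n}" for n in range(1, 51)]
rotating = count_allowed(PerIpRateLimiter(5, 60.0), proxies, 100)

print(single_ip, rotating)
```

The limiter works exactly as designed in both cases. The weakness is that the IP address is the only identity it knows about, and that identity is cheap to change.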

🔐 Advanced Bot Protection with SafeLine WAF

SafeLine WAF introduces a multi-faceted approach to combat modern web crawlers:

1. Request Signature Binding

Each client session is bound to specific attributes like IP, User-Agent, and browser fingerprint. Any alteration leads to session invalidation.
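The binding idea can be sketched with an HMAC over the session's attributes. This is an illustrative assumption about how such binding might work in general, not SafeLine's actual implementation; `SECRET_KEY`, `sign_session`, `is_session_valid`, and the sample values are hypothetical.

```python
import hashlib
import hmac

# Hypothetical server-side secret; a real deployment would load a random key
# from secure configuration rather than hard-coding it.
SECRET_KEY = b"replace-with-a-random-server-side-secret"


def sign_session(session_id: str, ip: str, user_agent: str, fingerprint: str) -> str:
    """Return an HMAC tag that binds the session ID to the client's attributes."""
    # Newline is used as the separator because header values cannot contain it.
    message = "\n".join([session_id, ip, user_agent, fingerprint]).encode("utf-8")
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def is_session_valid(tag: str, session_id: str, ip: str,
                     user_agent: str, fingerprint: str) -> bool:
    """Recompute the tag from the current request and compare in constant time."""
    expected = sign_session(session_id, ip, user_agent, fingerprint)
    return hmac.compare_digest(tag, expected)


tag = sign_session("abc123", "203.0.113.7", "Mozilla/5.0", "fp-9f2c")

# Same client attributes: the session is accepted.
same_client = is_session_valid(tag, "abc123", "203.0.113.7", "Mozilla/5.0", "fp-9f2c")

# Cookie replayed from a different IP: the tag no longer matches.
moved_ip = is_session_valid(tag, "abc123", "198.51.100.9", "Mozilla/5.0", "fp-9f2c")

print(same_client, moved_ip)
```

With this kind of check, a cloned cookie is only useful if the scraper also reproduces every bound attribute, which is what makes rotating proxies or swapping User-Agents mid-session costly.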

2. Behavioral Analysis

By monitoring user interactions such as mouse movements and keystrokes, SafeLine distinguishes between human users and bots.

3. Headless Browser Detection

Identifies and blocks requests from headless browsers commonly used in automated scraping.

4. Automation Control Detection

Detects browsers under automation control (e.g., via Selenium) and restricts their access.

5. Interactive Challenges

Implements CAPTCHAs and other challenges to verify human presence.

6. Computational Proof-of-Work

Introduces tasks that require computational effort, deterring bots by increasing their operational costs.
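A hashcash-style puzzle is one common way to build such a task. The sketch below assumes that scheme purely for illustration (the post does not say which puzzle SafeLine uses): the client must find a nonce whose SHA-256 hash has a required number of leading zero bits. The function names, the `demo-challenge` string, and the difficulty of 12 bits are hypothetical.

```python
import hashlib
import itertools


def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits the digest starts with."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: a single hash is enough to check a submitted nonce."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode("utf-8")).digest()
    return leading_zero_bits(digest) >= difficulty


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force nonces until one satisfies the difficulty."""
    for nonce in itertools.count():
        if verify(challenge, nonce, difficulty):
            return nonce


# 12 bits of difficulty needs about 4,096 hashes on average to solve.
nonce = solve("demo-challenge", 12)
print(nonce, verify("demo-challenge", nonce, 12))
```

The asymmetry is the point: verification costs one hash, while solving costs thousands. A single human visitor barely notices the delay, but a scraper fetching millions of pages pays it on every request.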

7. Replay Attack Prevention

Employs one-time tokens and session validations to prevent request replays.
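A one-time token can be sketched as a random value that the server issues, remembers, and deletes on first use. The in-memory `OneTimeTokenStore` below is a hypothetical illustration under that assumption; a real deployment would need shared storage across servers and periodic cleanup of expired entries.

```python
import secrets
import time


class OneTimeTokenStore:
    """Issue random tokens that can each be consumed once, within a time limit."""

    def __init__(self, ttl_seconds: float = 60.0) -> None:
        self.ttl = ttl_seconds
        self._issued = {}  # token -> expiry timestamp

    def issue(self, now: float = None) -> str:
        now = time.monotonic() if now is None else now
        token = secrets.token_urlsafe(32)
        self._issued[token] = now + self.ttl
        return token

    def consume(self, token: str, now: float = None) -> bool:
        now = time.monotonic() if now is None else now
        # pop() removes the token, so a replayed request finds nothing to consume.
        expiry = self._issued.pop(token, None)
        return expiry is not None and now < expiry


store = OneTimeTokenStore(ttl_seconds=60.0)
token = store.issue(now=0.0)

first_use = store.consume(token, now=1.0)  # the original request
replayed = store.consume(token, now=2.0)   # the same request sent again

print(first_use, replayed)
```

Because the token disappears on first use, capturing a valid request and resending it later does not work, even if every header and cookie is copied exactly.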

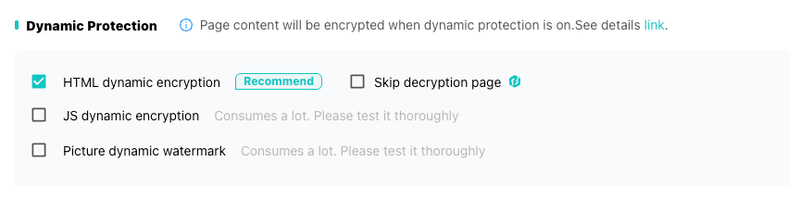

8. Dynamic HTML and JS Encryption

Encrypts and obfuscates HTML and JavaScript code, making it difficult for bots to parse and extract meaningful data.

⚙️ Implementing SafeLine WAF

Setting up SafeLine WAF is straightforward:

- Installation: Follow the official SafeLine WAF Documentation for installation steps.

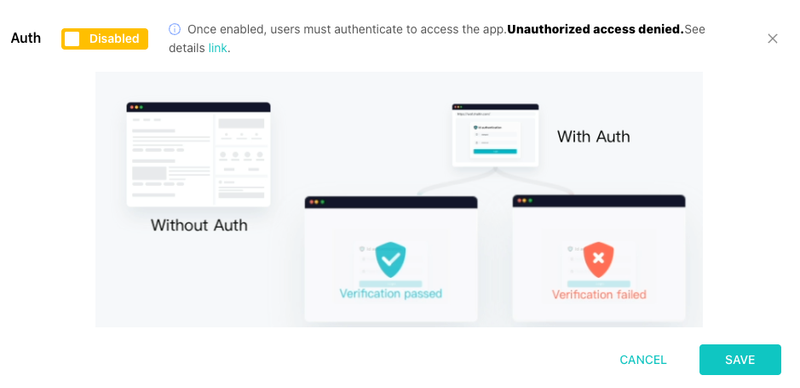

- Configuration: Enable anti-bot features through the user interface.

- Monitoring: Use the dashboard to monitor traffic and bot activity.

Once SafeLine is configured, legitimate users experience minimal disruption, while malicious bots are effectively blocked.

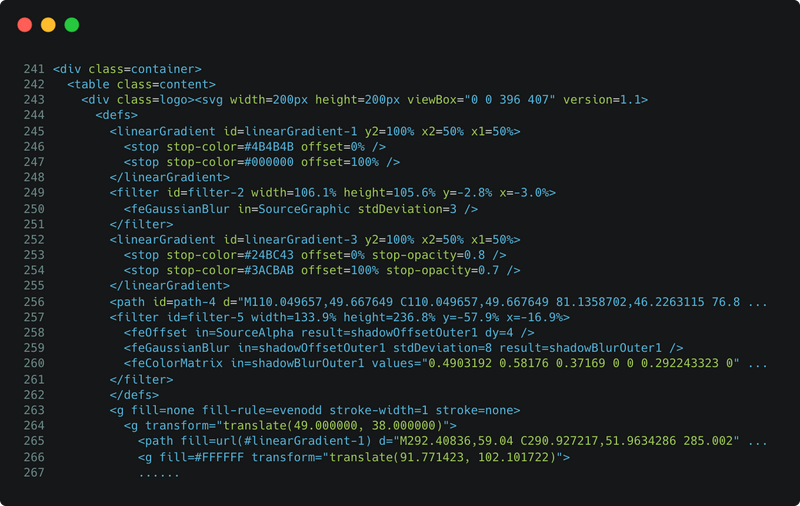

🌍 Real-World Impact: HTML Before & After SafeLine

When a site is protected by SafeLine, the HTML and JS are dynamically encrypted. Even though it’s the same page, every reload results in a different structure. Here's what that looks like:

Original HTML (Server-side):

Browser HTML After SafeLine Protection:

This isn’t just obfuscation. Every page load gets a unique DOM and script structure, making it extremely difficult for bots to parse or reuse.
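To see why markup that changes on every load is hard to scrape, consider a much simpler stand-in: renaming CSS class names at random for each response. This is not SafeLine's encryption, only a toy under stated assumptions; `randomize_class_names` and the sample HTML are hypothetical, and a real system would also have to rewrite the matching CSS and JavaScript.

```python
import re
import secrets

CLASS_ATTR = re.compile(r'class="([^"]*)"')


def randomize_class_names(html: str) -> str:
    """Replace each class name with a random alias, consistent within one response."""
    aliases = {}

    def rename(match: re.Match) -> str:
        names = match.group(1).split()
        # setdefault keeps the first alias chosen for a name in this response.
        renamed = [aliases.setdefault(name, "c" + secrets.token_hex(4)) for name in names]
        return 'class="' + " ".join(renamed) + '"'

    return CLASS_ATTR.sub(rename, html)


page = '<div class="price"><span class="price big">9.99</span></div>'

first_load = randomize_class_names(page)
second_load = randomize_class_names(page)

print(first_load)
print(second_load)
```

A scraper that relies on a selector such as `.price` finds nothing to match, and whatever selector it learns from one response is useless on the next. Even this toy version shows the effect. The visible text is untouched here, which is one reason it falls well short of the encryption described above.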

Cloud-Powered Human Verification

SafeLine’s human verification is powered by a cloud-based API from Chaitin. Each verification call leverages:

- Real-time IP threat intelligence

- Rich browser fingerprint data

- Behavior-based bot detection algorithms

The result? Over 99.9% bot detection accuracy.

And because the algorithms and JavaScript logic are continuously updated in the cloud, even if a sophisticated attacker cracks the current version, they’re only cracking an outdated one—we're always one step ahead.

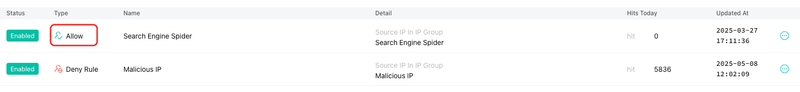

🔍 SEO Considerations

Concerned about search engine indexing? SafeLine WAF allows you to whitelist known search engine crawlers, ensuring your site's SEO remains unaffected.
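A whitelist is only as good as the check behind it, because any scraper can claim to be Googlebot in its User-Agent. A common way to verify the claim is a reverse DNS lookup followed by a forward lookup. The sketch below assumes that approach and is not a description of SafeLine's own mechanism; `is_verified_crawler`, its parameters, and the default suffix list are illustrative.

```python
import socket


def is_verified_crawler(ip: str,
                        allowed_suffixes=(".googlebot.com", ".google.com"),
                        reverse_lookup=None,
                        forward_lookup=None) -> bool:
    """Check a crawler's IP with a reverse DNS lookup plus a confirming forward lookup.

    The lookup functions are injectable so the logic can be exercised offline.
    The default forward lookup handles IPv4 only.
    """
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        # Step 1: the PTR record must sit under a domain the search engine owns.
        if not hostname.endswith(tuple(allowed_suffixes)):
            return False
        # Step 2: that hostname must resolve back to the same IP, since anyone
        # who controls an IP block can publish an arbitrary PTR record.
        return ip in forward_lookup(hostname)
    except OSError:
        # DNS failures (socket.herror, socket.gaierror) are treated as unverified.
        return False
```

In production the result would normally be cached per IP, since two DNS lookups on every request would add noticeable latency.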

🤝 Join the Community

Interested in discussing bot protection strategies? Join the SafeLine WAF community:
